Google sends a crawler (also called a spider or robot) to your site to learn about your content, which tells Google:

- What you’re about

- How popular you are

- What searches you’re relevant to

Google’s crawlers revisit your site frequently (usually daily or weekly, depending on numerous factors). This is why your “rankings” will fluctuate often.

From there, Google feeds that information into its search algorithms, alongside what it has learned about other websites and pages. Google has developed complex algorithms that decide what to show in search results for billions of searches, based on its extensive user data. These algorithms assign different weights to an array of factors depending on the search.
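To make the idea of search-dependent weights concrete, here is a minimal sketch in Python. It is purely illustrative: the factor names (`relevance`, `popularity`, `proximity`), the search types, the weight values, and the example URLs are all assumptions invented for this example. Google's actual factors and weights are not public, and its real systems are far more complex.

```python
# Hypothetical weights per search type. These names and numbers are made up
# for illustration; they are not Google's real factors or values.
WEIGHTS = {
    "local": {"relevance": 0.4, "popularity": 0.2, "proximity": 0.4},
    "informational": {"relevance": 0.6, "popularity": 0.3, "proximity": 0.1},
}


def score(page_factors, search_type):
    """Return the weighted sum of a page's factor values for one search type."""
    weights = WEIGHTS[search_type]
    return sum(weights[name] * page_factors.get(name, 0.0) for name in weights)


def rank(pages, search_type):
    """Sort pages from highest to lowest score for the given search type."""
    return sorted(
        pages,
        key=lambda page: score(page["factors"], search_type),
        reverse=True,
    )


# Two hypothetical pages with factor values between 0 and 1.
pages = [
    {
        "url": "https://example.com/guide",
        "factors": {"relevance": 0.9, "popularity": 0.8, "proximity": 0.1},
    },
    {
        "url": "https://example.com/store",
        "factors": {"relevance": 0.6, "popularity": 0.4, "proximity": 0.9},
    },
]

for search_type in WEIGHTS:
    ordered = [page["url"] for page in rank(pages, search_type)]
    print(search_type, ordered)
```

With these made-up numbers, the store page comes first for the "local" search type and the guide page comes first for the "informational" one. The same two pages swap places only because the weights changed, which is the point of weighting factors by search.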

Google draws on all of the user data it has collected over the years, which gives it deep insight into user behavior and helps it determine which web pages should be listed for which searches. Google’s algorithms have become some of the most complex in use because they draw on so much user data from sources such as Google Search, Chrome, and Android phones.

Google organizes user data from various sources and uses it to determine the most relevant results for a user’s query.

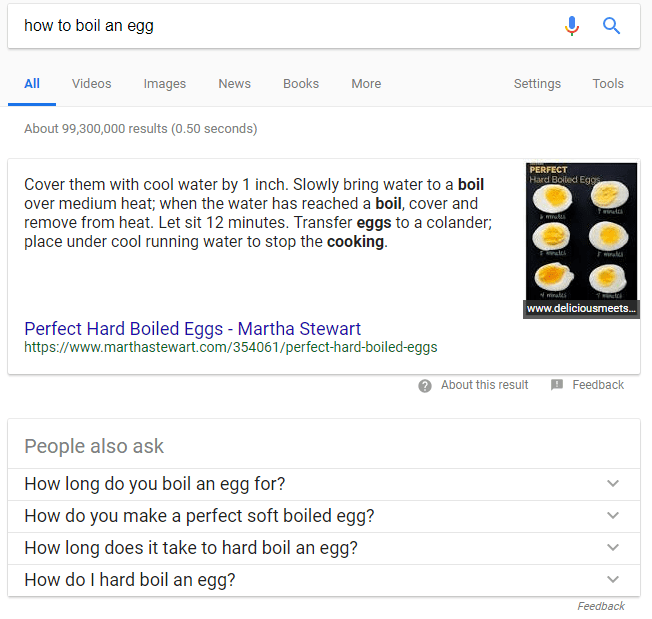

For example, for searches deemed “local,” Google mixes in Google Maps results; for searches deemed “simple questions,” it might mix in Knowledge Graph and featured snippet results (the white box highlighting an answer that you sometimes see in search results, as shown below). Google is able to adapt quickly to changes in user behavior.